Intro

Businesses are increasingly optimizing their IT infrastructure workloads by adopting cloud platforms such as AWS, Azure, GCP, and OCI. Cloud environments provide on-demand resources that let organizations scale as they expand their market share.

Moving to the cloud also gives greater flexibility to control IT infrastructure costs. In most organizations, databases account for a major share of the workload, and cloud platforms offer services for estimating the cost of heavy workloads.

When moving to the cloud, it is important to compare the costs and features each provider offers. Microsoft Azure entered the cloud market early and is a strong platform for migrating application and database workloads. Migrating databases to Azure can still be challenging, and demand for these skills is high.

Many businesses want multiple test environments for validating changes before they reach production, so it is important to be able to create custom images and build an environment quickly and efficiently. In this article, I will cover how to create a custom Oracle snapshot that can be used to create a VM.

Let's start with VM creation.

Create a VM in Azure

There are two options for setting up administrator access.

- Set up a user and a strong password.

- Use a generated SSH public key.

As this is an Oracle ASM standalone installation, I have added 4 disks, including 2 disks for DATA (external redundancy) and one for FRA.

As this is a test, I'm using a public IP address. Make sure to create the virtual network first; only then can you select it here.

As the last step of VM creation, check that validation has passed.
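The VM creation choices above can also be approximated with the Azure CLI. The following is a minimal sketch, not the procedure used in this article: the resource group, network names, image value, and data disk sizes are placeholders I have assumed, and the function only prints the `az vm create` command so it can be reviewed before it is run.

```shell
#!/bin/sh
# Sketch: print an `az vm create` command for a test VM with four data disks.
# Every value below is a hypothetical placeholder; adjust to your environment.
RESOURCE_GROUP="rg-oracle-test"
VM_NAME="oradb-01"
VNET_NAME="vnet-oracle-test"
SUBNET_NAME="default"
ADMIN_USER="azuser"
IMAGE_URN="publisher:offer:sku:version"   # replace with the Linux image you selected
DATA_DISK_SIZES_GB="128 512 512 512"      # assumed sizes: /u01, two DATA disks, one FRA

build_vm_create_cmd() {
  # --generate-ssh-keys corresponds to the SSH public key option above.
  printf 'az vm create --resource-group %s --name %s --image %s' \
    "$RESOURCE_GROUP" "$VM_NAME" "$IMAGE_URN"
  printf ' --admin-username %s --generate-ssh-keys' "$ADMIN_USER"
  printf ' --vnet-name %s --subnet %s' "$VNET_NAME" "$SUBNET_NAME"
  printf ' --data-disk-sizes-gb %s\n' "$DATA_DISK_SIZES_GB"
}

build_vm_create_cmd
```

Printing the command rather than executing it keeps the sketch safe to run anywhere; copy the output into a shell that is logged in to Azure once the values are correct.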

Partition the disk and mount /u01

Execute lsblk to view all the attached disks and use fdisk to partition the disk. Run partprobe so that the kernel re-reads the partition table, then format the new partition with the ext4 file system. Do not miss this step: if you do, the VM will not come up and will hang during boot.

These are the commands you need to execute to add the partition to the VM.

##### correct commands

fdisk /dev/sdc

partprobe /dev/sdc

mkfs.ext4 /dev/sdc1

blkid

mkdir /u01

mount -a    # run after adding the UUID that blkid reports for /dev/sdc1 to /etc/fstab
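The `/etc/fstab` entry mentioned in the last command can be sketched as follows. This is an illustration under assumptions: the helper name `fstab_line` is hypothetical, the UUID shown is a placeholder that must be replaced with the value from your own `blkid` output, and the `nofail` option is my addition so that the VM can continue booting if the disk is missing at startup.

```shell
#!/bin/sh
# Sketch: build an /etc/fstab line that mounts a file system by UUID.
fstab_line() {
  # $1 = file system UUID, $2 = mount point, $3 = file system type
  printf 'UUID=%s\t%s\t%s\tdefaults,nofail\t0\t2\n' "$1" "$2" "$3"
}

# Placeholder UUID; `blkid -s UUID -o value /dev/sdc1` prints the real one.
fstab_line "00000000-0000-0000-0000-000000000000" /u01 ext4

# As root, the line would be appended and mounted like this:
#   fstab_line "<uuid>" /u01 ext4 >> /etc/fstab && mount -a
```

Mounting by UUID rather than by device name matters here because device names such as /dev/sdc can change between boots when disks are attached or detached.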

After creating the partition, you can use lsblk to validate it.

[azuser@localhost ~]$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 30G 0 disk

├─sda1 8:1 0 800M 0 part /boot

├─sda2 8:2 0 28.7G 0 part

│ ├─rootvg-rootlv 252:0 0 18.7G 0 lvm /

│ └─rootvg-crashlv 252:1 0 10G 0 lvm /var/crash

├─sda14 8:14 0 4M 0 part

└─sda15 8:15 0 495M 0 part /boot/efi

sdb 8:16 0 75G 0 disk

└─sdb1 8:17 0 75G 0 part /mnt

sdc 8:32 0 128G 0 disk

└─sdc1 8:33 0 128G 0 part

sdd 8:48 0 512G 0 disk

└─sdd1 8:49 0 512G 0 part

sde 8:64 0 512G 0 disk

Sample blkid output

Execute the partprobe command so that the kernel picks up the new partition table, and use mkfs.ext4 to format the partition. As the last step, execute blkid to get the UUID of the new file system.

[root@oradb-01 ~]# blkid

/dev/mapper/rootvg-crashlv: UUID="cdf585f6-703a-4aef-b8de-404c9883a58e" BLOCK_SIZE="512" TYPE="xfs"

/dev/sde2: UUID="Z1I6oC-RWrU-DQPh-tkiS-VZP3-aGNb-khAaSc" TYPE="LVM2_member" PARTUUID="7239f924-9e5f-406f-b5b7-88862e86ea8d"

/dev/sde1: UUID="c5d1e180-5e1d-43c6-9ed6-c8ac37cb8061" BLOCK_SIZE="512" TYPE="xfs" PARTUUID="83c7509b-9398-4ca4-847a-59f03e4f9577"

/dev/sde14: PARTUUID="1833132d-49f6-4ae0-861a-30eda86be687"

/dev/sde15: SEC_TYPE="msdos" UUID="3503-1054" BLOCK_SIZE="512" TYPE="vfat" PARTLABEL="EFI System Partition" PARTUUID="0c2aee3b-7c66-42e5-91b3-52a8d2d7db16"

/dev/sdf1: UUID="06cd70bc-adec-4ab9-951e-eada6e45941d" BLOCK_SIZE="4096" TYPE="ext4" PARTUUID="619336a7-01"

/dev/sda1: UUID="6f9f562e-afe1-423e-8846-9ab001150f7c" BLOCK_SIZE="4096" TYPE="ext4" PARTUUID="727ac55d-01"

/dev/mapper/rootvg-rootlv: UUID="c866304a-09ef-4c3b-87cf-00f321a52356" BLOCK_SIZE="512" TYPE="xfs"

[root@oradb-01 ~]#

lsblk output after mounting /u01

[azuser@localhost ~]$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 30G 0 disk

├─sda1 8:1 0 800M 0 part /boot

├─sda2 8:2 0 28.7G 0 part

│ ├─rootvg-rootlv 252:0 0 18.7G 0 lvm /

│ └─rootvg-crashlv 252:1 0 10G 0 lvm /var/crash

├─sda14 8:14 0 4M 0 part

└─sda15 8:15 0 495M 0 part /boot/efi

sdb 8:16 0 75G 0 disk

└─sdb1 8:17 0 75G 0 part /mnt

sdc 8:32 0 256G 0 disk

└─sdc1 8:33 0 256G 0 part /u01

sdd 8:48 0 512G 0 disk

└─sdd1 8:49 0 512G 0 part

sde 8:64 0 512G 0 disk

The Azure documentation is well organized and clear on the ASM installation steps. See the following link from the Azure knowledge base for the ASM installation.

https://docs.microsoft.com/en-us/azure/virtual-machines/workloads/oracle/configure-oracle-asm

Install required RPMs for Oracle 19c database

Download the oracle-database-preinstall-19c RPM and install it on the server.

curl -o oracle-database-preinstall-19c-1.0-1.el7.x86_64.rpm https://yum.oracle.com/repo/OracleLinux/OL7/latest/x86_64/getPackage/oracle-database-preinstall-19c-1.0-1.el7.x86_64.rpm

yum -y localinstall oracle-database-preinstall-19c-1.0-1.el7.x86_64.rpm

Install required RPMs for Oracle 19c grid

First install the oracle-database-preinstall-19c RPM. Once that is complete, make sure to install the required oracleasm RPMs, which are needed for ASM disk configuration.

[root@oradb-01 ~]# rpm -qa |grep oracleasm

kmod-redhat-oracleasm-2.0.8-12.2.0.1.el8.x86_64

oracleasm-support-2.1.12-1.el8.x86_64

oracleasmlib-2.0.17-1.el8.x86_64

[root@oradb-01 ~]#
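The manual check above can be turned into a small script. This is a minimal sketch: the helper name `missing_oracleasm_pkgs` is hypothetical, and the three package names are taken from the listing above, so they may differ on other distributions or kernel flavors.

```shell
#!/bin/sh
# Sketch: report which oracleasm packages are absent from a package listing.
missing_oracleasm_pkgs() {
  # Reads installed package names (for example from `rpm -qa`) on stdin and
  # prints each required package that does not appear in the list.
  installed="$(cat)"
  for pkg in kmod-redhat-oracleasm oracleasm-support oracleasmlib; do
    printf '%s\n' "$installed" | grep -q "^${pkg}-[0-9]" || printf '%s\n' "$pkg"
  done
}

# Example with a partial listing: the two packages not in the input are printed.
printf '%s\n' oracleasm-support-2.1.12-1.el8.x86_64 | missing_oracleasm_pkgs

# On the server: rpm -qa | missing_oracleasm_pkgs
```

An empty result means all three packages are installed.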

Add the required groups and users for the installation. I am creating only the oracle user, which is used for both the grid and DB installations.

groupadd -g 54345 asmadmin

groupadd -g 54346 asmdba

groupadd -g 54347 asmoper

useradd -u 3000 -g oinstall -G dba,asmadmin,asmdba,asmoper oracle

usermod -g oinstall -G dba,asmdba,asmadmin,asmoper oracle
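If the setup is likely to be re-run, for example when rebuilding test environments, the group creation can be made repeatable. The sketch below is one possible approach, not part of the original procedure: the helper name `emit_group_cmds` is hypothetical, and it only prints guarded `groupadd` commands using the group names and IDs shown above.

```shell
#!/bin/sh
# Sketch: print groupadd commands that skip groups which already exist.
emit_group_cmds() {
  # Each argument has the form name:gid.
  for spec in "$@"; do
    name="${spec%%:*}"
    gid="${spec##*:}"
    printf 'getent group %s >/dev/null || groupadd -g %s %s\n' "$name" "$gid" "$name"
  done
}

emit_group_cmds asmadmin:54345 asmdba:54346 asmoper:54347
```

Review the printed commands, then run them as root (for example by piping the output to `sh`).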

Configure ASM

After installing the ASM packages, configure the ASM library so that it loads at server startup.

[root@oradb-01 ~]# oracleasm configure -i

Configuring the Oracle ASM library driver.

This will configure the on-boot properties of the Oracle ASM library

driver. The following questions will determine whether the driver is

loaded on boot and what permissions it will have. The current values

will be shown in brackets ('[]'). Hitting ENTER without typing an

answer will keep that current value. Ctrl-C will abort.

Default user to own the driver interface []: oracle

Default group to own the driver interface []: asmadmin

Start Oracle ASM library driver on boot (y/n) [n]: Y

Scan for Oracle ASM disks on boot (y/n) [y]: Y

Writing Oracle ASM library driver configuration: done

[root@oradb-01 ~]#

Once the configuration is complete, validate the ASM status. Make sure the status is still correct after restarting the oracleasm service; any errors here will affect server startup.

Validate server status

[root@oradb-01 ~]# oracleasm status

Checking if ASM is loaded: yes

Checking if /dev/oracleasm is mounted: yes

[root@oradb-01 ~]#
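For automated builds, the same check can be scripted. This is a minimal sketch that assumes the two status lines appear exactly as in the output above; the helper name `asm_status_ok` is hypothetical.

```shell
#!/bin/sh
# Sketch: succeed only when the ASMLib driver is loaded and mounted.
asm_status_ok() {
  # Reads `oracleasm status` output on stdin.
  status="$(cat)"
  printf '%s\n' "$status" | grep -q 'Checking if ASM is loaded: yes' || return 1
  printf '%s\n' "$status" | grep -q 'Checking if /dev/oracleasm is mounted: yes' || return 1
}

# Example using the output shown above as input.
if printf '%s\n' \
    'Checking if ASM is loaded: yes' \
    'Checking if /dev/oracleasm is mounted: yes' | asm_status_ok; then
  echo "ASMLib is ready"
fi

# On the server: oracleasm status | asm_status_ok || echo "ASMLib is not ready"
```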

Validate from systemctl

[root@oradb-01 ~]# systemctl start oracleasm

[root@oradb-01 ~]# systemctl status oracleasm

● oracleasm.service - Load oracleasm Modules

Loaded: loaded (/usr/lib/systemd/system/oracleasm.service; enabled; vendor preset: disabled)

Active: active (exited) since Thu 2022-06-23 17:23:51 UTC; 5s ago

Process: 16982 ExecStart=/usr/sbin/oracleasm.init start_sysctl (code=exited, status=0/SUCCESS)

Main PID: 16982 (code=exited, status=0/SUCCESS)

Jun 23 17:23:50 oradb-01 systemd[1]: Starting Load oracleasm Modules...

Jun 23 17:23:50 oradb-01 oracleasm.init[16982]: Initializing the Oracle ASMLib driver: OK

Jun 23 17:23:51 oradb-01 oracleasm.init[16982]: Scanning the system for Oracle ASMLib disks: OK

Jun 23 17:23:51 oradb-01 systemd[1]: Started Load oracleasm Modules.

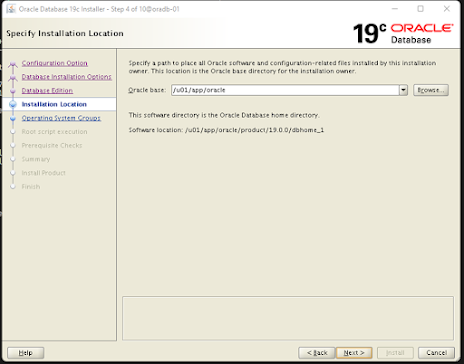

Create directories for grid and DB installation

mkdir -p /u01/app/19.0.0.0/grid

mkdir -p /u01/app/oracle/product/19.0.0/dbhome_1

chown oracle:oinstall /u01/app/19.0.0.0/grid

chown oracle:oinstall /u01/app/oracle/product/19.0.0/dbhome_1
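The four commands above can be wrapped in a function so that the same layout is reproduced on every test environment. This is a sketch under assumptions: the helper name `prepare_oracle_homes` is hypothetical, and the owner argument is optional only so that the example can be tried against a scratch directory without root privileges.

```shell
#!/bin/sh
# Sketch: create the grid and database home directories under a base path.
prepare_oracle_homes() {
  # $1 = base directory (/u01 in this article)
  # $2 = optional owner, for example oracle:oinstall
  base="$1"
  owner="${2:-}"
  for dir in "$base/app/19.0.0.0/grid" "$base/app/oracle/product/19.0.0/dbhome_1"; do
    mkdir -p "$dir" || return 1
    if [ -n "$owner" ]; then
      chown "$owner" "$dir" || return 1
    fi
  done
}

# Trial run against a scratch directory, without changing ownership.
scratch="$(mktemp -d)"
prepare_oracle_homes "$scratch" && ls -d "$scratch"/app/*

# As root on the server: prepare_oracle_homes /u01 oracle:oinstall
```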

19c grid installation

Expected orainstRoot.sh and root.sh output.

[root@oradb-01 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@oradb-01 ~]# /u01/app/19.0.0.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/19.0.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

To configure Grid Infrastructure for a Cluster or Grid Infrastructure for a Stand-Alone Server execute the following command as oracle user:

/u01/app/19.0.0.0/grid/gridSetup.sh

This command launches the Grid Infrastructure Setup Wizard. The wizard also supports silent operation, and the parameters can be passed through the response file that is available in the installation media.

[root@oradb-01 ~]#

DB Installation

Create a Snapshot of the disk

- OS disk

- Oracle binary installation disk
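Snapshots of the two disks listed above can be taken with the Azure CLI. The following is a minimal sketch rather than the exact steps used here: the resource group and snapshot names are hypothetical, and treating the first data disk (`dataDisks[0]`) as the Oracle binary disk is an assumption that should be checked against your VM. The functions only print the commands.

```shell
#!/bin/sh
# Sketch: print the az commands for looking up disk IDs and snapshotting them.
RESOURCE_GROUP="rg-oracle-test"   # hypothetical resource group
VM_NAME="oradb-01"

disk_id_query_cmd() {
  # $1 = JMESPath expression into the VM's storage profile
  printf 'az vm show --resource-group %s --name %s --query "%s" --output tsv\n' \
    "$RESOURCE_GROUP" "$VM_NAME" "$1"
}

snapshot_cmd() {
  # $1 = snapshot name, $2 = managed disk resource ID
  printf 'az snapshot create --resource-group %s --name %s --source "%s"\n' \
    "$RESOURCE_GROUP" "$1" "$2"
}

# Step 1: look up the managed disk IDs.
disk_id_query_cmd 'storageProfile.osDisk.managedDisk.id'
disk_id_query_cmd 'storageProfile.dataDisks[0].managedDisk.id'   # assumed binary disk

# Step 2: snapshot each disk, substituting the IDs returned in step 1.
snapshot_cmd "${VM_NAME}-os-snap" '<os-disk-id>'
snapshot_cmd "${VM_NAME}-u01-snap" '<binary-disk-id>'
```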